Archive

Automatically signing into Yammer Embed from SharePoint Online

So Microsoft have dropped the SharePoint Yammer app and are advising you to use the Yammer Embed libraries to embed your Yammer feeds in your SharePoint site. Sweet, you think, I can do that. You set it up, embed it on your page and everything works fine.

For the first day. After that you find that you just have the Yammer Login button and you have to click that and sign-in once a day, or more, to get the feeds to work.

Does this sound familiar?

Maybe you raise a ticket with Microsoft but the only answer you get back is “it is by design”, like that is any help.

Well, after one, now ten, of those calls and still being told it is by design, I now agree with them: it is by design, and with some small tweaks you can get it to sign in seamlessly almost all of the time. (I say almost because I’m sure there are some cases where it won’t.) Surprisingly, it isn’t the only service that is affected. I’ll explain. But first let me set up the environment.

The SharePoint I’m going to focus on is SharePoint Online. The licence includes Yammer Enterprise and that is also set up. Single sign-on has been set up for the Office 365 tenant. In my case, the customer in question was also using AD FS to provide a seamless sign-on experience.

Happy Days

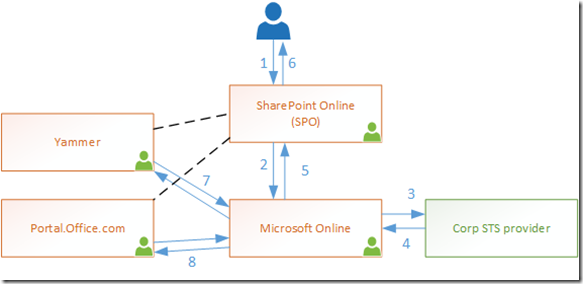

Time for a picture:

OK. So you can see that in the happy day scenario (i.e. no cookies, first-time access or similar) the flow is like this.

User hits SPO (1), and it says “I don’t know you, visit login.microsoftonline.com (MSO)”.

Browser redirects to MSO (2). At this point, perhaps after a few more redirects, the user is directed to the organisation sign-in server (Corp) (3), and the chain bubbles back with each node receiving the user identity (4 & 5) and setting a cookie so they can remember who it is.

Now that the user is back in SPO, the Yammer Embed attempts to load. Yammer doesn’t know who the caller is, so it redirects the user to MSO (7).

MSO knows who the user is, thanks to the previous call, and returns this information to Yammer.

Yammer now displays the feed requested. This is the same, or similar, for other services that are included in the SPO display, such as the SuiteLinksBar (part of the Office 365 header), which comes from Portal.office.com (Portal) (8).

Sad Days

Ah.. that is how it should work, and it does for 8 to 12 hours.

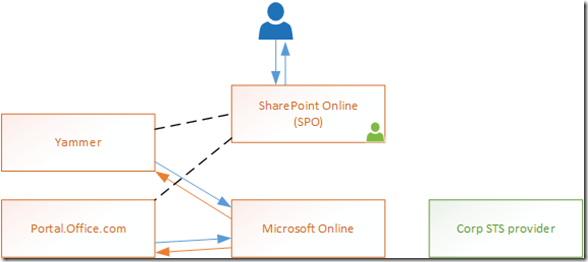

Another picture.

The user requests an SPO page. SPO still has an active identity, so it doesn’t redirect to MSO and just serves the page. This includes a Yammer feed. Yammer has forgotten who the caller is, so it redirects to MSO to find out. This is as per the happy day flow. But now the cookie that MSO held with the identity has expired. (That is an assumption, don’t repeat it as fact. Whether it is because the cookie expired or because the token MSO received from Corp has ended its lease, the result is the same.) MSO does not know who is making the call. Here is where it goes bad.

This time, instead of MSO redirecting the user to Corp, it just returns to Yammer without an identity token and Yammer displays a sign-in button. (A similar pattern happens for Portal.)

This actually makes sense (hence by design) as MSO at that point doesn’t have control of the user interface so can’t really prompt the user or direct the user away. Bummer but that is how it is.

So why didn’t SPO’s identity expire? The SPO team, to keep the experience nice, keep their identity cookie fresh so the user isn’t repeatedly asked to sign in. In fact all the services in this process do that. Yammer, SPO, the Portal and any other services or add-ins you’ve included on the site are the same. The problem is that, without regular call-backs to MSO, the cookies on the front end expire later than the MSO ones, so some services will still load and others won’t.

Keeping MSO Identity Fresh

Is there any way to keep the MSO cookie/token fresh?

Yes. Microsoft have some documentation, buried deep and almost impossible to find, that has a solution. But you can find it here, in section 6.4. The technique is called a “smart link”. The idea is simple: provide a short, really friendly URL that redirects to the very unfriendly URL that SPO sends to MSO when there are no cookies present.

I was recently asked if it really mattered, since the user didn’t see anything different. YES it does; it makes a huge difference, as I’ve just explained.

The smart link is the concept anyway. We don’t actually need to use the precise URL; we use a smarter variant. The URL we want to smart link is:

https://login.microsoftonline.com/login.srf?wa=wsignin1.0&whr=contoso.com&wreply=https:%2f%2fcontoso%2esharepoint%2ecom%2f%5fforms%2fdefault%2easpx

You will need to adjust the highlighted parts (the contoso.com domain and the contoso.sharepoint.com host name) for your environment. The WHR parameter tells MSO where the Corp AD FS server is. The WREPLY parameter tells MSO where to redirect once the identity has been confirmed.
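As a minimal sketch of how that URL is put together, the JavaScript below builds it from the two values you need to change. The function name `buildSmartLink` and its parameter names are hypothetical, and the sketch assumes MSO accepts the standard percent-encoding produced by `encodeURIComponent` (the URL above also escapes dots and underscores, which decodes to the same address).

```javascript
// Hypothetical helper: builds the MSO sign-in URL described above.
// homeRealm -> value for whr (your federated domain, e.g. contoso.com)
// replyUrl  -> value for wreply (where MSO sends the user afterwards)
function buildSmartLink(homeRealm, replyUrl) {
  return 'https://login.microsoftonline.com/login.srf' +
    '?wa=wsignin1.0' +
    '&whr=' + encodeURIComponent(homeRealm) +
    '&wreply=' + encodeURIComponent(replyUrl);
}

var link = buildSmartLink(
  'contoso.com',
  'https://contoso.sharepoint.com/_forms/default.aspx'
);
console.log(link);
```

The short, friendly URL then only has to redirect to the value of `link`.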

By using this smart link as the browser home page, or by using it as the published link to access the SPO site, you ensure that MSO first has a chance to revitalise its cookies/tokens and can then provide the identity of the caller to any service that requests it.

There are some instances where this is probably not going to work, like someone who only accesses SPO by opening documents. But if this link is their home page then that is highly unlikely.

What about on premises?

If your on-premises environment is set up to use Windows Authentication directly then it probably won’t work.

If you have Yammer SSO set to authenticate using an on-premises AD FS server and have your SharePoint environment authenticating with the same AD FS environment then yes, you probably can use this technique. Just use Fiddler to find the URL for your environment and you can smart link people to that, and it should have the same result.

Conclusion

Complex problem, simple workaround. That is all. My customer went from walking away from Yammer embedded feeds to embracing them more, all because they didn’t have to sign in every time they wanted to see one.

SharePoint 2013 Ratings and Internet Explorer 10+

You may have noticed that the star ratings have changed in SharePoint 2013 to utilise the theme of the site, or you may not. If you haven’t seen them they look like this…

Well most of the time they look like that. But sometimes, when using IE10 or above, they look like this…

So what is going on here? After some looking at the code, decompiling and investigating, it seems to be a sequencing problem. Here’s my breakdown.

The RatingFieldControl renders the following HTML:

<span id="RatingsCtrl_ctl00_PageOwnerRviewDateBar_..."></span>
<script type="text/javascript">
  EnsureScript('SP.UI.Ratings.js', typeof(SP.UI.Reputation), function() {
    // Do Stuff
  });
</script>
<div style="display: none;">
  <img id="RatingsLargeStarFilled.png" src="themedfile"></img>
  <!-- other img tags for different parts of the ratings -->
</div>

Well, obviously it is a little more; I’ve distilled it down to the basics. The first span is filled in by the function included in the script tag. The last hidden div is used by the script to find the image files that are required. And therein lies the problem.

When using Fiddler to investigate the problem with rendering in IE10, I could see that the loading sequences when I pressed enter in the address bar vs pressing the refresh button vs using IE8/9 were different.

When the display bug occurs it appears that the EnsureScript call, which is part of the Script on Demand libraries, executes immediately since SP.UI.Reputation is already loaded. This means that when the script goes to find the images it can’t find them, because they haven’t been rendered to the page yet. The simple fix is for Microsoft to change the rendering sequence for their control. As there are private methods involved it isn’t possible for us to just override the render method, and even if we did, our control wouldn’t be the one used in most cases.
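The ordering problem can be reproduced in miniature. The sketch below is plain JavaScript with no SharePoint dependency; `ensureScript` is a simplified stand-in for the Script on Demand behaviour described above, not the real implementation, and `renderRatingsControl` is a hypothetical name.

```javascript
// Simulates the render order of the ratings control.
// Returns true when the callback found the hidden images, false otherwise.
function renderRatingsControl(scriptAlreadyLoaded) {
  var page = { images: [] };  // hidden <img> tags rendered so far
  var pending = [];           // callbacks waiting for the script to load
  var starsFound = null;

  // Simplified stand-in: run now if loaded, otherwise queue for later.
  function ensureScript(loaded, callback) {
    if (loaded) { callback(); } else { pending.push(callback); }
  }

  // 1. The inline <script> block is reached first.
  ensureScript(scriptAlreadyLoaded, function () {
    starsFound = page.images.length > 0;
  });

  // 2. The hidden <div> with the themed images is rendered afterwards.
  page.images.push('RatingsLargeStarFilled.png');

  // 3. Queued callbacks run once the script has finished loading.
  pending.forEach(function (callback) { callback(); });

  return starsFound;
}

console.log(renderRatingsControl(false)); // true: callback ran after the images
console.log(renderRatingsControl(true));  // false: callback ran before the images
```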

There are two solutions I can think of:

1. Calculate the themed source files and specify the images yourself. This is probably not ideal as a change in the theme will change the files you want to point to, and these can’t be predicted.

2. Re-execute the script after the page has completely rendered in the browser.

I’m going to look at using option 2:

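Without going into full details, here is the solution. Add this JavaScript to the page (I already have the jQuery library loaded):

$(function () { // shorthand for document.ready
  if (typeof SP !== 'undefined' && SP.UI && SP.UI.Reputation) { // only if SP.UI.Ratings.js has loaded
    SP.UI.Reputation.RatingsHelpers.$c(false); // re-read the hidden img tags
    // Re-run the inline script emitted after each ratings control
    $('[id^=RatingsCtrl_] ~ script').each(function () {
      eval($(this).text());
    });
  }
});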

How does this solve the problem?

The first line is shorthand for waiting for the document.ready event, so it ensures the script isn’t run until after the page has finished loading.

The IF statement detects whether SP.UI.Ratings.js has been loaded. I could use EnsureScript here as well, but by checking this way, if the script is still waiting to be loaded then the emitted script above will still be waiting to execute, so we won’t need our little hack.

The call on line 3 invokes the RatingsHelpers method responsible for reading the img tags for use by the script, and thus forces these to be reloaded.

The last block finds, using jQuery selectors, the script blocks associated with the Ratings controls and executes these statements again, forcing the display of the star ratings to be updated correctly.

And there you have it, the simplest hack I could find to correct what could easily be done by Microsoft switching a few lines of code around.

Automating SharePoint build and deploy #NZSPC

This year I presented at the New Zealand SharePoint Conference on how to build and deploy SharePoint solutions automatically with TFS. Here is the presentation.

New Zealand SharePoint Conference

I just thought I’d confirm here that I’ll be speaking at the New Zealand SharePoint Conference this year in April. Make sure you grab your tickets now.

TFSVersioning

So a while ago I mentioned automated versioning of components during a TFS Build. At the time I suggested Chris O’Brien’s approach, which is a good, simple approach. However, if you want something a bit more powerful, take a look at TFSVersioning.

TFSVersioning is a CodePlex project that has heaps of versioning goodness and good documentation to match.

It is really easy to add to your build process; just follow the instructions they have given.

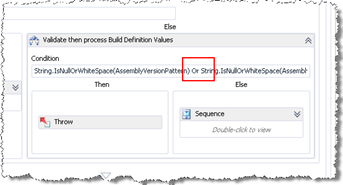

When using this with SharePoint projects, the only limitation I found was that you needed to apply the Assembly Version Pattern, which obviously you don’t want to do with SharePoint. This appears to be a limitation in one of the embedded XAML files.

If you want to remove this limitation then just do the following. Download the source code (I’m using the 1.5.0.0 version.) and open the solution in Visual Studio.

Find the VersionAssemblyInfoFiles.xaml file and open up the designer.

Find the task that says “Validate then process Build Definition Values”. Change the “Or” in the condition to an “And” so it reads “String.IsNullOrWhiteSpace(AssemblyVersionPattern) And String.IsNullOrWhiteSpace(AssemblyFileVersionPattern)”.

Compile the project and use the DLL instead of the one included in the package.

The other XAML and flows already allow you to specify either the AssemblyFileVersionPattern or AssemblyVersionPattern, just not this flow.
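To see why swapping “Or” for “And” lifts the limitation, here is a small JavaScript sketch of the two conditions. It assumes the condition being true is what makes the task reject the build definition; the function names and the `'1.2.3.4'` pattern value are placeholders for illustration, not taken from the TFSVersioning source.

```javascript
// Stand-in for .NET's String.IsNullOrWhiteSpace.
function isNullOrWhiteSpace(value) {
  return value == null || String(value).trim() === '';
}

// Original condition: true when EITHER pattern is missing.
function originalCondition(assemblyVersionPattern, assemblyFileVersionPattern) {
  return isNullOrWhiteSpace(assemblyVersionPattern) ||
         isNullOrWhiteSpace(assemblyFileVersionPattern);
}

// Patched condition: true only when BOTH patterns are missing.
function patchedCondition(assemblyVersionPattern, assemblyFileVersionPattern) {
  return isNullOrWhiteSpace(assemblyVersionPattern) &&
         isNullOrWhiteSpace(assemblyFileVersionPattern);
}

// A SharePoint build that only supplies the file version pattern:
console.log(originalCondition('', '1.2.3.4')); // true
console.log(patchedCondition('', '1.2.3.4'));  // false
```

With the patched condition, supplying only the file version pattern no longer trips the check.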

Note: Version 2 has been released for TFS 2012. I do not know whether that version has this limitation.

Automating SharePoint build and deployment–Part 4 : Putting it together

This is the fourth, and probably final, in a multipart post about automating the build and deployment of SharePoint solutions. The other blog posts are:

In this post we will put all these bits together to form one process from check-in to deployed solution. Again, as in the rest of this series, this post is going to focus on the principles behind this task, and how we went about it. It is not going to give any code.

The parts

So far we have built an automatic deployment script and got automated builds happening that build the package for deployment. Now we need to get the automated build triggering a deployment into a staging or test environment.

We could use the lab management features of TFS Team Build to handle this, and that would be a good way of going. But we chose our approach because the only dependent tool is PowerShell; the other active components can be replaced with anything that will do that task, and there are a few to choose from.

For our team, in order to leverage the work that had been done by other teams just before us we decided to use TFS Deployer to launch the deployment based on changes to the build quality.

PowerShell Remoting

In order to allow TFS Deployer to do its job we need to enable PowerShell remoting between the build server (as the client) and our target SharePoint server (as the server).

To set up the SharePoint server, open an elevated PowerShell prompt and enter the following commands:

Enable-PSRemoting -Force
Set-Item WSMan:\localhost\Shell\MaxMemoryPerShellMB 1000
Enable-WSManCredSSP -Role Server

This script enables remoting, increases the size of the memory available and then allows the server to delegate authentication to another server. This enables our deployment script to issue commands on other parts of the farm if required.

The build server is our client, so there is a little more work to do there.

# Enable PowerShell remoting to the server
$SharePointServer = "SharePointServer"
Enable-PSRemoting -Force
Set-Item -Force WSMan:\localhost\Client\TrustedHosts $SharePointServer
Restart-Service winrm

# Enable the client to delegate credentials to the server
Enable-WSManCredSSP -Role Client -DelegateComputer $SharePointServer

# Also need to edit Group Policy to enable Credential Delegation

Further information on this entire process can be found at Keith Hill’s blog http://rkeithhill.wordpress.com/2009/05/02/powershell-v2-remoting-on-workgroup-joined-computers-%E2%80%93-yes-it-can-be-done/.

TFS Deployer – The agent in the middle

TFS Deployer is the tool we have chosen (it is not the only one) to detect the change in the Build Quality and launch the scripted deployment on the chosen environment.

TFS Deployer has a good set of instructions on its project site. To simplify our environments we run TFS Deployer on the build server.

In order to simplify the process for our many clients and projects, we have TFS Deployer call a generic script that first copies the project-specific deployment script, after interrogating the $TfsDeployerBuildDetail object for the location of the files. It then calls that deployment script to execute the deployment.

The project specific script file is also reasonably generic. It first copies the packaged output (see previous posts) to the target server. It then remotely extracts the files and executes the deployment scripts from inside that package. Of course, in this scenario we know all the parameters that need to be passed so we can conveniently bypass any prompting by supplying all the parameters needed.

Conclusions

That ends the series. Unfortunately it has been code light, as I was working through the process and trying to capture the philosophies rather than the actual mechanism. Hopefully you have learnt something from reading these posts and picked up a few tips along the way.

As stated in the first post the purpose of this series was to put together and understand our build framework and requirements so that I could build a new version using the TFS Build workflow mechanism. I believe I now have that understanding, so I can start that work. Perhaps doing that work will lead to a few more blog posts in the future.

Automating SharePoint Build and Deployment–Part 3

This is the third in a multipart post about automating the build and deployment of SharePoint solutions. The other blog posts are:

In this post we will look at the build itself. Again, as in the rest of this series, this post is going to focus on the principles behind this task, and how we went about it. It is not going to give any code.

Why?

If we are doing SharePoint solutions, surely a quick build from the Visual Studio environment, and a package from there, will produce the correct output for us. Why should we go through the pain of automating the build process?

That is a very good question. Here are a few reasons:

- Ensure that the code can be built consistently from any development machine

- Ensure that everything required for the build is in the source control repository.

- Ensure that what is in source control builds.

- Ensure regular testing of the build process.

- Ensure rapid resolution of a broken build.

But of course it comes back down to the big three we mentioned in a previous post: Simplify, Repeatable and Reliable.

Simplify – Because in this case we are simplifying the work we need to do, and the associated documentation required.

Repeatable – Because we need to be able to repeat the process. Maybe the build won’t be built again for a year, but we need to ensure that when that time comes we can do it.

Reliable – Because as long as it builds the output is known to be built the same way, and in the same order.

But the most important reason of all: it is not hard to set up.

What?

The build process only needs to do one thing: turn the source code into the deployment package we talked about in the previous post.

One of the advantages of automating everything is that we can ensure that all parts in the process are tagged and labelled so that we can find the exact code that was used to generate the components running in production.

In order to do that we are going to need the following actions performed:

- Automatic increment of a version number.

- Labelling the source code with the above version number.

- Stamping of all components with the build name, and version number.

- Build of the source code

- Package the built binaries into WSP packages, for SharePoint.

- Package the WSPs and our deployment scripts into the deployment package.

That seems like a lot, but as you will see most of that can be performed by the Team Foundation Build services.

How? – Building the solution

Team Foundation Server (and the service offering) make this a really easy task. From Team Explorer just expand the build node, right click, select “create a new build definition…” and follow the wizard.

There are a few extra things that you will need to do to build SharePoint projects though:

- SharePoint assemblies need to be on the build agent. Chris O’Brien has this covered in his post Creating your first TFS Build Process for SharePoint projects. While it is not recommended to install SharePoint on the build server, we have installed the binaries on the server but NOT configured it as a farm, which would kill the performance of the server. We also installed Visual Studio, but this again is not necessary or advisable. I suggest that you follow How to Build SharePoint Projects with TFS Team Build on MSDN, as that provides good alternatives and clearly lays out the process.

- /p:IsPackaging=True needs to be added to the MSBuild arguments parameter so that TFS will tell MSBuild to also perform the SharePoint packaging tasks and create the WSPs.

How? – Versioning

Every .NET assembly contains two version numbers: the assembly version, which is used in the strong name and thus needs to be bound into a lot of the element.xml files, and the file version number, which is just that, a number.

Therefore for our purposes the file version number is adequate, not to mention less complex to implement, to track the running components back to the source code.

We will also need to use the version number as part, or all, of the build number and ensure that the source code repository is labelled with the build number as well. Fortunately TFS Build already performs these actions for us.

Chris O’Brien has a simple workflow extension, and instructions, based on another derivative work. For further information and for how to extend the TFS build template to include this versioning see part 6 of his continuous integration series.
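As a rough illustration of stamping only the file version, the JavaScript sketch below rewrites the `AssemblyFileVersion` attribute in the text of an AssemblyInfo file and leaves `AssemblyVersion` alone. It is not Chris O’Brien’s workflow extension; the helper name `stampFileVersion` and the version values are hypothetical.

```javascript
// Hypothetical helper: replaces only the AssemblyFileVersion attribute,
// so the strong name (which uses AssemblyVersion) is unaffected.
function stampFileVersion(assemblyInfoText, fileVersion) {
  return assemblyInfoText.replace(
    /AssemblyFileVersion\("[^"]*"\)/,
    function () { return 'AssemblyFileVersion("' + fileVersion + '")'; }
  );
}

var before =
  '[assembly: AssemblyVersion("1.0.0.0")]\n' +
  '[assembly: AssemblyFileVersion("1.0.0.0")]\n';

var after = stampFileVersion(before, '1.0.1307.4');
console.log(after);
```

Because the assembly version never changes, nothing that binds to the strong name needs to be touched between builds.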

How? – Packaging

Once the build has done the work of building the assemblies and the SharePoint packages the next step is to package these artefacts into the deployment package we mentioned in the previous post.

The team I was part of did this in the old TFS 2005 format (which used MSBuild) by extending the PackageBinaries target. In addition we were able to separate the definition of the package from the creation of the package by using MSBuild include files. This has made the solution developed incredibly easy to implement and highly reusable even though it is in the MSBuild format.

To integrate this with the newer TFS build workflows we just need to modify the build workflow to call this target at the appropriate place, after the components have been built.

The process for packaging is really quite simple:

1. Build the package in a staging directory structure by copying the output files from the appropriate place.

2. Zip up the staging directory.

3. Ensure that the zip file is included as a build output.

Last words

So now we have talked about the overall strategy, the deployment package and how we create the deployment package. In the next post we will tie all the parts together to show how we can get all the way from the code to the running SharePoint server, every, or almost every, time that someone checks in some code.

Automating SharePoint Build and Deployment – Part 2: Deployment

In the first part of this series we introduced you to the concept of automated deployment and the three parts of our build and deployment framework: Build, Package and Deploy.

This post is about the Deploy process, what it looks like and what we learnt.

Advantages

While SharePoint has a mechanism for the deployment of solutions and features onto the platform, there are many advantages to automating the deployment of solutions into SharePoint. Let’s have a quick look at what these advantages are:

1. Removal of manual steps

Installing a solution into SharePoint requires:

- The installation of the solution (WSP) itself, from a command line using either STSADM or PowerShell.

- The Deployment of the solution, from PowerShell or Central Admin.

- Activation of the features, at Farm, Web App, Site Collection or Site level, using a combination of site administration, central administration or PowerShell.

Thus a standard deployment can have pages of manual steps to be followed. But as we can perform all of these tasks using PowerShell we should be able to build a script which can be used for the complete deployment of the solution.

2. Simplify documentation

This leads on from the first point. With the removal of all the manual steps we can now produce less documentation.

3. More reliable deployment

Also following on from the first point. With the removal of the manual steps we by default get a more consistent and therefore more reliable deployment.

Principles

There are a number of principles we want to encapsulate in the deployment package.

- Simplicity – For the people using the package it should be as simple as unzipping it and double clicking an icon.

- Agnostic – The deployment package should be able to be used in multiple environments without modification.

- Self Aware – The deployment package should be able to detect what has previously been deployed and take appropriate action. i.e. upgrade vs fresh install.

- Reusable – The package should be able to be reused in multiple mechanisms, i.e. manually triggered installation versus automatically triggered.

In addition any framework we put together to help with the deployment we would like to be able to take from project to project with only configuration changes.

The Package

The build and deploy framework we use lets us build any type of package we need to deploy the project. It could be MSIs, MSBuild scripts, PowerShell, batch files, anything, as long as it is all self-contained or relies on already installed built-in commands.

The framework also delivers pre-built templates for BizTalk, Databases, IIS, COMPlus, SSRS and Windows services, any of which can be combined and utilised together. However, there was no reusable template for deployment of SharePoint solutions, so we had to create one to add to the framework. We decided to use PowerShell for the installation script as we could leverage not only the SharePoint cmdlets but also the standard SharePoint .NET components. As an aside, a lot of the other templates heavily utilise a custom task for executing PowerShell code from the MSBuild script, so we thought it was time we challenged that approach.

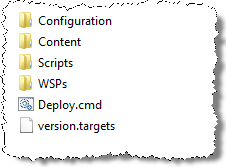

The first thing we want to do is decide on a structure for the package. In order to keep with the simplicity principle, and to keep it in line with the patterns already in the framework, it is preferable to have the root of the package uncluttered. (I should note here that while I was involved with the building of the framework, the result was the amalgamation of work and ideas from multiple people and sources. I’m not attempting to take the credit from these people, even though I can’t remember their names.)

To the right is the structure that we settled with.

- Configuration contains the definitions and configuration for the package.

- Scripts contain PowerShell (and any other script support) required to execute the configuration instructions.

- WSPs contain the SharePoint solution packages

- Content contains some additional content required to be loaded into SharePoint during the installation, which would have otherwise been post implementation manual steps before the site would work.

In the root of the structure are just two files: deploy.cmd for launching the installation, and version.targets. Version.targets is an artefact created by our build process and has two purposes:

- It can be referred to during deployment.

- If the zip file is renamed, we can still determine the version without digging down to the assemblies in the package.

Need good boot strapping (Simplicity)

The Deploy.cmd file calls a deploy.ps1 file, which then calls an install.ps1 file. This long chain was necessary and each file has its purpose.

Deploy.cmd performs three functions:

- Checks for the existence of PowerShell v2.0

- Ensures that the appropriate execution policy is set

- Launches deploy.ps1

Originally this script also prompted the user for the action they wanted to take. But we found that the repeated prompts for the web app that the solution was to be deployed to became a bit cumbersome, so we deferred the menu to deploy.ps1.

Deploy.cmd means that the person doing the install can just right-click and select run as administrator. There is no need for them to open up a particular PowerShell window, navigate to the right path, type the right command etc. It is just a right-click.

Deploy.ps1 performs four key tasks which were easier to do with PowerShell than in the command prompt:

- Check the farm version is at or higher than what we have built for.

- Prompt the user for the Web Application to deploy to, based on the web applications available.

- Prompt the user for what action to perform.

- Call the appropriate scripts to perform the requested action.
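The farm version check in the first task comes down to comparing dotted version numbers part by part rather than as text. The sketch below shows that comparison in JavaScript purely for illustration (the real script was PowerShell and is not shown here); `isFarmVersionSufficient` is a hypothetical name and the version numbers are example values.

```javascript
// Hypothetical helper: compares dotted version strings numerically.
// Returns true when farmVersion is at or above requiredVersion.
function isFarmVersionSufficient(farmVersion, requiredVersion) {
  var farm = farmVersion.split('.').map(Number);
  var required = requiredVersion.split('.').map(Number);
  var length = Math.max(farm.length, required.length);
  for (var i = 0; i < length; i++) {
    var f = farm[i] || 0;      // missing parts count as 0
    var r = required[i] || 0;
    if (f !== r) { return f > r; }
  }
  return true; // identical versions
}

console.log(isFarmVersionSufficient('14.0.6029.1000', '14.0.4762.1000')); // true
console.log(isFarmVersionSufficient('14.0.4762.1000', '14.0.6029.1000')); // false
console.log(isFarmVersionSufficient('14.0.10.0', '14.0.9.0'));            // true
```

The last call is the case a plain string comparison would get wrong, since "10" sorts before "9" as text.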

Install.ps1 performs the main deployment operations. It is therefore at this point that we encountered most of our problems. We also use this script as our entry point for automatically triggered deployments as this script does not, if all parameters are supplied, prompt the user for any information.

PowerShell runs the Deactivate code (Simplicity)

When PowerShell is used to deactivate a feature any custom deactivation code is run in the PowerShell instance, not on a timer job. This means that the assembly holding the custom code is loaded into that instance, and there is the problem. Once an assembly has been loaded into a PowerShell instance it isn’t possible to remove the assembly without unloading the whole PowerShell runtime.

To get around this we separated Uninstall from Install and call the Install.ps1 twice – once for each action – from the deploy.ps1 script.

There may be other ways to get around this:

- Version the assemblies for every release. This seems excessive in a SharePoint environment where the assembly version needs to be coded in a number of places. Sure, there are ways to tokenise this, but it hasn’t been implemented universally in Visual Studio so it is still awkward to do.

- Use the upgrade instead. This would work, but unless you are versioning your assemblies this seems difficult, and in a CI type environment determining which version you are upgrading from isn’t always straightforward. Again SharePoint hasn’t made this easy.

Log everything

Frequently the errors reported during an installation are the result of an unreported error further up, or of incorrect choices made by the person installing. Where a human is involved these are sometimes avoidable; where no human is the cause, they could point to corrections needed in our scripts.

To help determine which case an error falls into, log everything; then you are a long way toward diagnosing the fault. PowerShell helps out here with some useful built-in transcription commands. We use these at the start of every one of our scripts so that all output is recorded for us in RTF files.

In addition to the standard output from the script we also output some other useful information, such as the values of all the parameters that were used to invoke the script. It helps.

We found these logs were unnecessary for automatically triggered builds, as the output was packaged and sent via email by the tools we were using, so we added an extra parameter to the scripts to suppress logging in these scenarios. We obviously don’t set that parameter for manually triggered deployments.

Test before execution (Self aware)

In the scripts there are multiple steps that can cause errors, such as removing a solution that has not been retracted or deactivating a feature that is already deactivated. Usually these conditions are testable before execution and can be worked around if we are aware of them, i.e. don’t deactivate the feature if it is already deactivated, and retract the solution only if it isn’t already retracted. Thus each part of our script generally tests before executing, rather than trying to trap the error and report it erroneously.
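The test-before-execute idea can be sketched as a function that inspects the current state and returns only the steps that are actually needed. This is an illustration in JavaScript, not one of the PowerShell scripts we used; the `state` object, its property names and the step labels are all hypothetical.

```javascript
// Hypothetical state object describing what is currently on the farm.
// Returns the ordered list of uninstall steps that are actually required.
function planUninstall(state) {
  var steps = [];
  if (state.featureActive) { steps.push('deactivate feature'); }
  if (state.solutionDeployed) { steps.push('retract solution'); }
  if (state.solutionInstalled) { steps.push('remove solution'); }
  return steps;
}

// Feature already deactivated, solution still deployed:
console.log(planUninstall({
  featureActive: false,
  solutionDeployed: true,
  solutionInstalled: true
}));

// Nothing installed yet (fresh farm): no steps at all.
console.log(planUninstall({
  featureActive: false,
  solutionDeployed: false,
  solutionInstalled: false
}));
```

Each step is skipped when its precondition is not met, so the script never asks SharePoint to do something that would raise an avoidable error.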

Separate Configuration from Scripts (Reusable)

To make the scripts reusable across solutions and customers, the scripts perform actions based on XML configuration files in the Configuration folder. This means that we can take the same scripting process and apply it to the next set of solutions that we do. This is by far easier than modifying a large script of utilities every time we need a slightly different but similar deployment.

Farm Aware (Agnostic)

There are a number of instances when doing SharePoint installs that IIS, the SharePoint timer or admin services need to be restarted. In a multi-server farm this action needs to be done on all servers, not just the one you are executing the scripts on. This means that your scripts need to detect the servers in the farm and perform the reset on all of them, where appropriate.

Last words

All the lessons above actually push us towards the principles listed earlier; I’ve marked each lesson with the principle it is associated with.

For those that are disappointed because I did not show any of the scripts we used, tough! The post was getting too long without showing the code. What you can do, though, is keep your eyes on this blog as I’ll post some of these tricks at a later stage. Most of them you can find online if you look anyway, so you aren’t missing anything big.

The next post will talk about how we get the build process to build this package for us.

Automating SharePoint Build and Deployment–part 1

Over the last twelve years of my career I’ve been part of a service delivery company that delivers software, both bespoke and packaged, to hundreds of customers every year. In all of these projects there are some common tasks and frameworks that we have created to build and deploy our products. Doing so has improved the quality of the products we deliver, so I think it is worth sharing my experiences with building them.

This article, and the ones following, aren’t about those frameworks. I’m not going to tell you how they work, and I’m not going to give you the code (so don’t ask). This is because of a number of factors, but the key one is that the code is out of date and uses deprecated features of the current versions of Microsoft Team Foundation Server (TFS) and other tools.

This series is going to be about the guiding principles and techniques we have used to extend this framework so that we can do automated builds, and deployments, with SharePoint. I’m hoping that, 1. we can understand what we have so we can update it and 2. that you, the reader, can improve your environment using these thoughts.

The toolset

Before I continue it is probably worth mentioning the tools we have used to build our framework, even though the principles in this series can and should be applied to any other tools that perform a similar function.

- Microsoft Team Foundation Server – this is used primarily as a source control repository, but the TFSBuild components are critical to the build stage, which I’ll leave for the subject of another post.

- TFS Deployer (http://tfsdeployer.codeplex.com/) – this is a CodePlex project that monitors the builds happening in TFS and triggers scripts based on changes to the build quality, result, etc.

- MSBuild – Visual Studio, and obviously TFSBuild, use this behind the scenes to do the actual compilation of the code. We use this to package the build output and sometimes as the scripting language for the deployment, again I’ll leave that subject for another post.

- PowerShell – The ultimate scripting language for managing Microsoft technologies and products.

- SharePoint 2010 – Ok so I almost forgot that one. The fact that we deploy against this does not mean that we cannot use a different version. It is just that from 2010 SharePoint had really useful PowerShell cmdlets to help. SP 2013 will work just as well using this broad approach; MOSS 2007 will require some changes to the Deployment step.

You probably noticed that these are all either Microsoft technologies or built on Microsoft technologies. That is because I work in the Microsoft Solutions team, and also because the point of this series is deploying to SharePoint (which is itself a Microsoft product). This doesn’t mean you can’t substitute other tools but we have chosen to use this set.

Why automate?

It is important to define the goals behind this automated process. Otherwise we could end up automating everything but achieving nothing instead of focusing on the important areas to automate first.

The goals are simple:

- Save time – No one likes doing the same thing over and over.

- Reduce errors – If it is automated there is less chance of forgetting something or doing it wrong.

- Save cost – By achieving a time saving and reducing the rework from errors we’ll automatically save costs. In addition, reusing the framework on the next project cuts the time needed to set it up, which is a saving that can be passed directly on to the customers.

How and what are we automating?

The short version is that we are automating everything to do with building and releasing the software. As soon as a developer checks in some code we want to be able to have that built, deployed onto a SharePoint farm and tested, before we tell the tester that they can have a look.

At least that is the dream. To realise this dream, and I haven’t quite got there yet, we have to break down the problem into small tasks that can be automated. Automating the entire process will be accomplished by building smaller automated pieces and then automating the running of these smaller pieces.

So let’s break it down a little, which will also guide the structure for the rest of this series.

After many attempts at defining the parts of the framework we have settled on three broad areas for automating:

- Build – The building of the source code, versioning the assembly outputs, signing etc.

- Package – The packaging of the build outputs into something that can be deployed.

- Deploy – The deployment of the package created in the above step.

The main objective is to automate the deployment post shipment. This reduces the time it takes to write installation instructions, reduces the errors that invariably occur when the Infrastructure people attempt to install our software and, if an error does occur, reduces the time it takes to determine where it went wrong.

All the steps therefore work toward meeting this objective: the first two build and create the package, while the third tests it before shipment and controls the release post shipment.

Because we need to know what the deployment looks like before we can define the package, we will start by investigating what we need to deploy SharePoint solutions. Then, as we progress through the series, we will work backwards through the package and build steps.

There are of course other approaches for doing this; in fact, when we come to update our framework we will probably lean heavily upon them. The most notable is Chris O’Brien’s series on SharePoint 2010 Continuous Integration. However, our framework does some things differently, so we will need to make some changes to that approach.

But that is enough of an introduction. In the next post we will look at the structure of the deployment package.

AD FS next steps…

Once you have AD FS set up with SharePoint there are some other points you may want to consider. These are the ones I looked at when installing for a client recently.

Logout

When the user logs out from the SharePoint site the user is not logged out of AD FS. This may or may not be a problem but needs to be considered. There are two options:

1. Disable Single Sign-on in AD FS. To do this you will need to modify the web.config for the AD FS installation, see SharePoint and AD FS Part 2, and look for the microsoft.identityServer.web node. In there you will find a singleSignOn property. Change this value to false. This method has the disadvantage that users can no longer sign on once for the whole organisation if there are multiple web sites they can browse. This may or may not be an issue.

2. Modify the logout in SharePoint so it logs out of AD FS. Shailen Sukul has an excellent example of this method here.

Adding another Web application

The point will invariably come when another web application from the SharePoint farm will need to use the same AD FS instance. Steve Peschka has an excellent blog post “How to Create Multiple Claims Auth Web Apps in a Single SharePoint 2010 Farm” explaining how to do this. The only thing that isn’t clear in his post is how to get the $ap variable populated if you already have it registered. This is simple though: if you have only one token issuer registered then the following line will get it for you.

$ap = Get-SPTrustedIdentityTokenIssuer

If you have more than one, pass the issuer’s name with the -Identity parameter to pick the right one:

$ap = Get-SPTrustedIdentityTokenIssuer -Identity "name of issuer"
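Once $ap is populated, registering the extra web application comes down to adding its realm to the issuer. The sketch below shows one way this could look; the Add-ProviderRealm function, the URL and the realm value are placeholders for illustration, so check them against the steps in the post mentioned above and your own AD FS relying party configuration.

```powershell
# Sketch only: the function name, URL and realm are hypothetical placeholders.
function Add-ProviderRealm {
    param(
        [Parameter(Mandatory = $true)][string]$IssuerName,
        [Parameter(Mandatory = $true)][string]$WebAppUrl,
        [Parameter(Mandatory = $true)][string]$Realm
    )
    $ap  = Get-SPTrustedIdentityTokenIssuer -Identity $IssuerName
    $uri = New-Object System.Uri($WebAppUrl)

    # Only add the realm if this web application is not registered yet.
    if (-not $ap.ProviderRealms.ContainsKey($uri)) {
        $ap.ProviderRealms.Add($uri, $Realm)
        $ap.Update()
    }
    else {
        Write-Host "$WebAppUrl already has a realm registered, skipping."
    }
}

# Example call (placeholder values):
# Add-ProviderRealm -IssuerName "name of issuer" -WebAppUrl "https://webapp2.example.com" -Realm "urn:sharepoint:webapp2"
```

The check before the Add call means the function can be run again without failing on a duplicate key.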